Raytracing is a great technique to render photo-realistic lighting, reflections and even refractions and caustics. But with ever-increasing rendering resolutions, we’ve never really been able to do any great-looking full-scene raytracing in real-time and a capacity that is ready for consumer video games and other applications. Raytracing just involves shooting insane amounts of virtual photons into a scene and finding out how they move, which, for complex scenes and high resolutions, overwhelms and consumer computer hardware built to this date. The next Generation of Video game consoles, Playstation 5 and XBOX One Series X are going to include dedicated ray-tracing hardware. Does that mean, we can now ray-trace everything? No, unfortunately it does not, but here is why hardware raytracing is still awesome.

Let’s have a look at the history of rendering hardware/graphics accelerators (that we now call GPUs).

In the early 90s, even fast-paced video games Like the iconic Doom were rendered in software. This means that there was no dedicated hardware for rendering 3D graphics and all computationally intensive and repetitive calculations like ungodly amounts of rasterizations and interpolations that were done by the computer CPU. (Once could say that Doom wasn’t even really 3D, because it wasn’t possible to look up and down, due to the unique rendering techniques involved.) CPUs are great at doing any kind of operation, but, when doing a lot of it, it’s always faster to do particular tasks using dedicated accelerator hardware. GPUs were introduced to take over the tasks the CPUs struggled at when rendering computer graphics, due to the sheer amount of calculations, and put them in dedicated hardware. Initially, that hardware was only-fixed function, which means that their pipeline was was only configurable rather than programmable. But in the mid-2000s, programmable GPUs became a thing and suddenly, developers could go crazy and tell the GPU exactly what they wanted to do, while still taking advantage of dedicated hardware that took care of commonly required tasks like vertex transforms at blazing speeds. Vertex and Pixel shaders became a thing and allowed granular control over how the geometry and surfaces or 3-dimensional objects should behave.

In the late 2000s, we moved over to GPGPUs (General Purpose GPUs). Instead of some programmable stages in the pipeline, we now had unified hardware that was in charge of all of the programmable stages while still accelerating particular calculations commonly used in computer graphics. People suddenly had supercomputers with hundreds of cores in their house! But you can’t do everything using the programmable pipeline. To create computer graphics, one needs to be aware of the hardware that’s available and use it. While you can, technically, render an entire world only in a pixel shader, it still insanely slow and very limited in terms of the data that can be processed to generate that world. The common approach for real-time graphics is to make use of the vertex steps in the pipeline to determine the area to draw on and then fill that area in some way using a pixel shader.

Even deferred rendering pipelines that make more use of post-processing (by computing lighting in a single pass instead of separately for every object) rely heavily on having the rendered objects pre-processed by the vertex pipeline.

So even if we can use a shader to specify what happens to, say, textures on the surface of an object, there is still dedicated hardware beyond GPGPU compute units that we can make use of right from pixel shader code. When specifying a texture lookup in a pixel shader, depending on the settings for that texture via the graphics API (e.g. OpenGL), a texture filter will be applied to smoothly interpolate it. This filtering happens in dedicated hardware. Even with GPUs with thousands of compute cores, implementing your own texture filter would kill performance, compared to using what the hardware already provides.

Now let’s look at what this new raytracing stuff does and what it doesn’t. Just like trying to render an entire scene using a pixel-shader, rendering an entire scene using raytracing shaders would be overkill, even for next-generation hardware.

The magic in real-time computer graphics comes from combining the available hardware resources, programmable or not, in efficient ways. With traditional vertex/fragment shader approaches, you use a vertex shader to resolve the 3D-space of your models into the 2D-space of the screen, send it off to the fixed function pipeline (“out” in modern GLSL), let the GPU do some magic and then pick it up again (“in”) in the fragment shader to process the textures in the area of your model on the screen. The dedicated GPU hardware has just done a lot of magic for you between your shader stages.

In a similar fashion, just as you traditionally don’t write texture filtering code for basic filtering, with raytracing shaders, you don’t write raytracing code. You specify what rays you want to send, what happens when a ray hits, and decide if you need to send any rays at all. Then some magic happens in the hardware and you have a result you can use further down the pipeline.

Next-gen graphics APIs introduce ray generation shaders that allow you to shoot rays, intersection shaders that get called on intersections and hit/miss shaders that get called when the ray is finished tracing. But whatever happens between those stages is fixed-function and that’s the point. With this hardware, we can finally solve the insanely expensive part of 3-D triangle intersections in 3D space right in dedicated hardware while specifying what to do with the data from the area we hit using the convenience of shaders.

But is it enough for rendering everything using ray tracing?

Most likely, no, except for some experimental games that heavily rely on procedural content generation and can be mostly processed within the GPU, minimizing memory bandwidth constraints.

Purely raytraced images just look extremely noisy unless there is an insane amount of samples rendered. The traditional approach with vertex/fragment pipelines, often in combination with hardware-antialiasing (like MSAA where the fragment shaders get only calculated once per pixel, while only depth and stencil values are supersampled) is just nice and fast. Raytracing everything, we would lose those beautiful opportunities we have to render smooth stuff fast, even with AI-de-noising techniques that might take 20% of the rendering of a raytraced scene.

There are no numbers for the RDNA2 architecture used in the next console generation yet, but let’s do some naïve math:

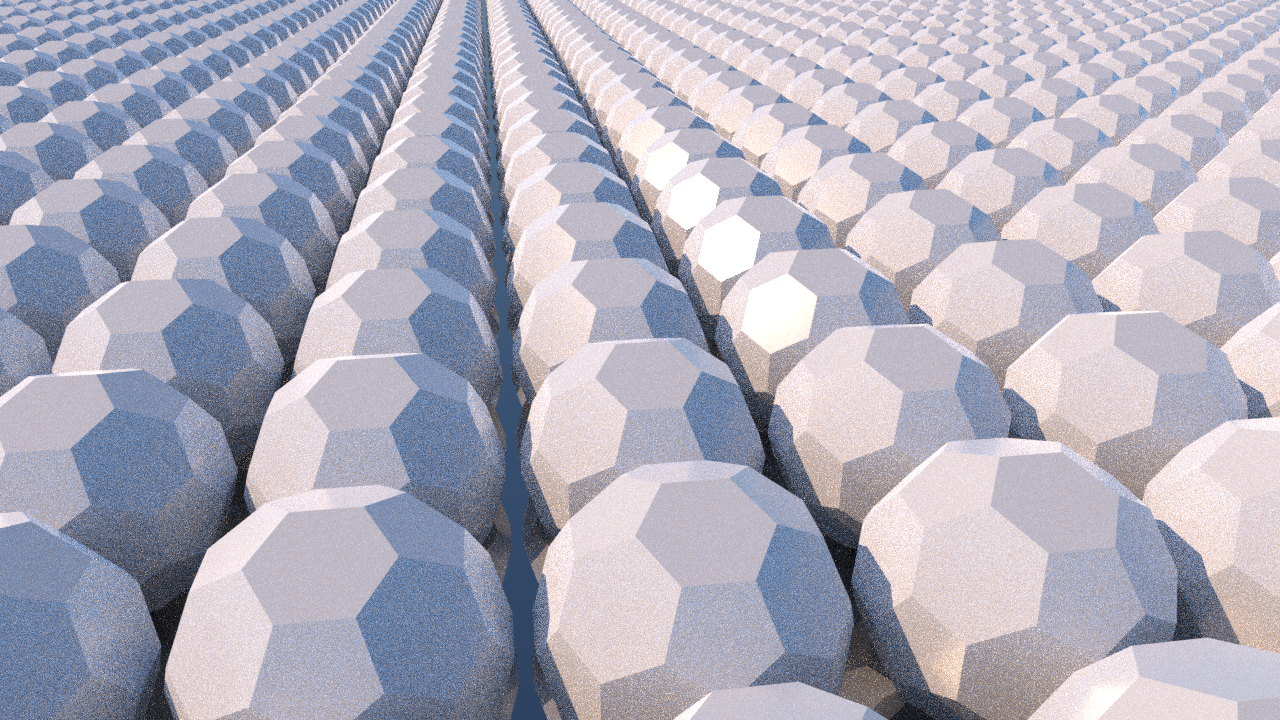

Let’s consider a 1280×720 image at 60 frames per second and 64 samples per pixel. That would still look pretty noisy. Here is an image rendered in a path tracer at 1280×720 with 64 samples per pixel, for which we would have to calculate around 3.5 billion samples per second, considering 60 frames per second.

Now on the hardware side, considering the XBOX one Series X, we’re talking about 56 compute units clocked at 1.83 ghz. But other than that, we don’t know much yet about the RDNA 2 architecture. But even assuming that we can process around 40 billion ray operations per second (which would be four times as fast as a GeForce 2080ti) and around 5 ray operations per sample, to support decent transparency and other effects, we’d have to up this to roughly 5 rays per sample, resulting in just enough headroom to render 1280×720 at 60fps.

Upping the resolution to 1920×1080, the GPU could only pump out roughly 30 fps while at 3840×2160, we’d have to live with a terrible 7 frames per second, simply to support all the rays we need to shoot to get a noisy image (which would then require further post-processing).

To summarize this, it doesn’t make sense to use raytracing as the sole rendering solution, but it needs to be used in conjunction with existing techniques.

|  |

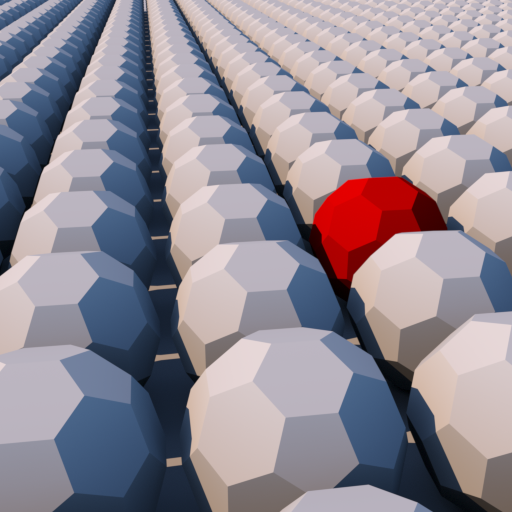

| Target Image | Raytraced at 16 spp |

|  |

| Only raytraced global illumination (16spp) | High-resolution image without GI (can be achieved in real-time using traditional rendering techniques) |

| |

| 16spp GI Only (filtered) | |

| |

| High-resolution traditional rendering with filtered RT composite |

The upcoming hardware releases are still amazing, because they’ll allow us to do better reflections, global illumination and effects like caustics. Because of the implementation as part of the programmable shading pipeline it could even be used for hardware physics (e.g. hit tests) and spatial sound processing. It is great to be used like traditional rasterization, but for objects in 3D space that aren’t in the view frustum, for example. But we’ll need to live with relatively low-resolution reflections that will still look great, combined with high-resolution normal maps and other techniques used in traditional rendering pipelines. We need to take the new hardware capabilities as what they are: a way to do a particular task really fast and we must also, like it’s always been in computer graphics, use other techniques, accelerated by different hardware, in creative ways and combine them to achieve the look we want with the required performance.

Leave a Reply